TL;DR

When you have more data than you could ever read, you use algorithms to learn from it. You can use them to learn what causes something and what belongs together, & even to approach general learning from books, speech & video.

Introduction

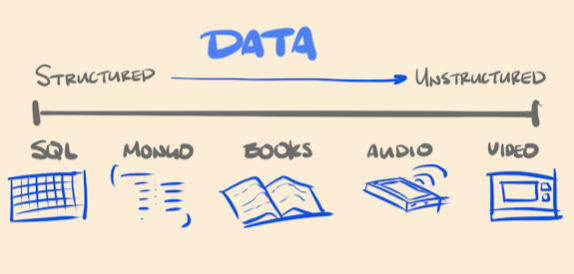

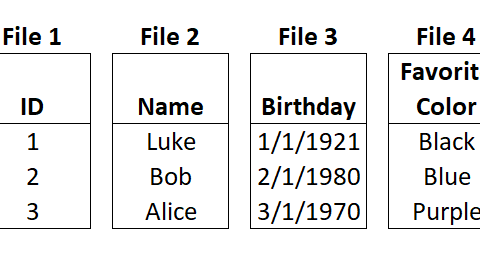

The world collects a lot of data. At some point we call it “big” data, even though there isn’t much consensus on when it becomes more than just “data”. The data we talked about in the Databases article is straightforward, since it lines up neatly in rows and columns or “objects”. There is also useful data that is not so structured, though: books, video & pictures.

We collect data so we can learn things that will help us reach our goals. Yesteryear, you’d take a 100- or 1,000-row spreadsheet full of detail about your customers and find insights like, say, moms with 3 kids being your most loyal, but the learning still took a healthy dose of mental effort. When your data is captured in 1,000 videos or 1,000,000,000 rows, reading is no longer an option.

With big data like that, there are some major jobs to be done that are not only useful but necessary to start driving insights from your data. (Speaking of grouping: these all overlap, and a technique can often belong to more than one category, but I’m trying to simplify here.)

Regression

Have you ever settled an argument by saying, “Well, the real answer is somewhere in the middle. We both have a point.” These gray-area answers apply to a lot of situations, and in data you can capture them using regression analysis. Each thing that could make a difference in your outcomes is represented as a variable. How important is a customer’s age to why they purchased our new software? How about gender? Home country? Each of these variables can go into a regression model that, once fitted, can say something like, “Ahh, age explains about 35% of the variation, gender 25% & home country 10%.”
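To make the idea concrete, here is a minimal sketch of a multiple linear regression fitted by ordinary least squares, in plain Python so it runs without extra libraries. The customer data, the `is_member` flag and the function names `fit_ols` and `r_squared` are hypothetical illustrations, not taken from a real dataset or library. R² is the share of the variation in the outcome that the model explains overall; splitting that share among individual variables takes additional analysis.

```python
from typing import List, Sequence


def fit_ols(X: Sequence[Sequence[float]], y: Sequence[float]) -> List[float]:
    """Fit y ~ b0 + b1*x1 + ... + bk*xk by ordinary least squares.

    Solves the normal equations (A^T A) b = A^T y, where A is X with a
    leading column of ones for the intercept b0. Fine for a toy example;
    real libraries use more numerically stable methods.
    """
    A = [[1.0] + [float(v) for v in row] for row in X]
    n = len(A[0])
    # Build the augmented matrix [A^T A | A^T y].
    M = [
        [sum(r[i] * r[j] for r in A) for j in range(n)]
        + [sum(r[i] * yi for r, yi in zip(A, y))]
        for i in range(n)
    ]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda row_idx: abs(M[row_idx][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for row in range(col + 1, n):
            factor = M[row][col] / M[col][col]
            for c in range(col, n + 1):
                M[row][c] -= factor * M[col][c]
    # Back substitution gives the coefficients [b0, b1, ..., bk].
    b = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(M[i][j] * b[j] for j in range(i + 1, n))
        b[i] = (M[i][n] - tail) / M[i][i]
    return b


def r_squared(X, y, b) -> float:
    """Share of the variation in y that the fitted model explains."""
    preds = [b[0] + sum(bj * xj for bj, xj in zip(b[1:], row)) for row in X]
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - p) ** 2 for yi, p in zip(y, preds))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


# Hypothetical customers: [age, is_member (0/1)] -> amount spent.
customers = [[20, 0], [30, 1], [40, 0], [25, 1], [35, 0]]
spend = [50, 75, 90, 65, 80]
coefs = fit_ols(customers, spend)
r2 = r_squared(customers, spend, coefs)
print(coefs, r2)  # coefs ~ [10.0, 2.0, 5.0], r2 ~ 1.0
```

The toy data is exactly linear (spend = 10 + 2 × age + 5 × is_member), so the fit recovers those numbers and R² comes out at about 1; real data is never this tidy. In practice you would reach for a library such as NumPy or scikit-learn rather than solving the normal equations by hand.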

This is commonly associated with standard statistical approaches & structured data.

Classification

Walk into almost any school and you can see human brains classifying each other into groups; however, some kids belong in multiple groups, so how do you put them into just one? Data scientists use tools like KNN (k-nearest neighbors), which puts a data point into the group most common among its closest neighbors: “Are you mostly a nerd? Well then we’ll put you with the nerds. (whispers to self: yesss!)” There is a whole list of other tools here that ultimately get at the same thing of putting like with like: Naive Bayes, Random Forest, Support Vector Machine.
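A minimal k-nearest-neighbors sketch in plain Python: a new point gets the label held by most of its `k` closest labeled points. The two features (hypothetical hours of coding and hours of sport per week), the labels and the `knn_classify` name are made up for illustration; a real use would normally scale the features and rely on a library implementation.

```python
import math
from collections import Counter


def knn_classify(train, query, k=3):
    """train: list of ((feature1, feature2), label). Returns the majority
    label among the k training points closest to query (Euclidean distance)."""
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    top_labels = [label for _, label in by_distance[:k]]
    return Counter(top_labels).most_common(1)[0][0]


# Hypothetical students: (hours coding per week, hours of sport per week).
students = [
    ((9, 1), "nerd"), ((8, 2), "nerd"), ((10, 1), "nerd"),
    ((1, 9), "jock"), ((2, 8), "jock"), ((1, 10), "jock"),
]

print(knn_classify(students, (7, 3)))  # nerd
print(knn_classify(students, (2, 7)))  # jock
```

An odd `k` avoids tied votes when there are only two groups.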

This is commonly associated with both structured and unstructured data, & it overlaps with both regression & machine learning.

Machine Learning

Machine learning is the broad field; deep learning and neural nets are the branch of it behind many of today’s headlines, and they operate on a layered principle (thus the term “deep”): each layer takes as its input the output of the layer underneath. Something like this: is there something in this pixel or not? Something. Ok, next layer. (Lots more layers that I’m zooming by…) Is the shape a circle or a square? A circle, ok, next layer. Is the shape a zero or an O? An O. Got it: our neural net has processed this picture and determined that this is an O, not a zero. In this way a neural net is loosely modeled after the human brain, taking inputs in sequence and stacking up the learning until it reaches a conclusion.
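The layering can be sketched as a tiny two-layer network in plain Python. The weights below are hand-picked, hypothetical values rather than learned ones (a real network learns its weights during training), chosen so the first layer detects two simple features, “at least one input is on” and “both inputs are on”, and the second layer combines them into XOR. The point is the structure: the second layer never sees the raw inputs, only the first layer’s output.

```python
from typing import List


def step(z: float) -> float:
    """Simple on/off activation; real networks usually use smooth ones."""
    return 1.0 if z > 0 else 0.0


def dense_layer(inputs: List[float], weights: List[List[float]],
                biases: List[float]) -> List[float]:
    """Each neuron takes a weighted sum of ALL inputs plus a bias."""
    return [step(sum(w * x for w, x in zip(neuron_w, inputs)) + b)
            for neuron_w, b in zip(weights, biases)]


# Hypothetical, hand-picked weights (not learned).
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [-0.5, -1.5]  # neuron 0: "either input on"; neuron 1: "both on"
OUTPUT_W = [[1.0, -1.0]]
OUTPUT_B = [-0.5]        # fires for "either on, but not both", i.e. XOR


def forward(x: List[float]) -> float:
    hidden = dense_layer(x, HIDDEN_W, HIDDEN_B)       # layer 1 sees raw inputs
    output = dense_layer(hidden, OUTPUT_W, OUTPUT_B)  # layer 2 sees layer 1 only
    return output[0]


for pair in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(pair, forward(pair))
```

No single layer of this kind can compute XOR on its own; stacking a second layer on top of the first is what makes it possible.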

It is used on both structured and unstructured data and is the driver behind many of the popular results we see today in image recognition, speech recognition & more.

Training

Much of this work first requires a training data set, where the right answers are already known, that you run your algorithm against to fit and refine it before setting it loose on real data. You then check it on held-back data it hasn’t seen, again with known answers, to measure how well it predicts and classifies. That tells you how it is likely to perform when you release it on data where you don’t know the answer (or where the outcome hasn’t happened yet).
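A minimal sketch of the train-then-test workflow in plain Python: shuffle labeled data, hold some back, fit on the rest, then score on the held-back rows whose answers you already know. The one-number threshold “model”, the toy data and the 25% test fraction are hypothetical choices made to keep the example short.

```python
import random


def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of rows and hold back a fraction for testing."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]  # (train, test)


def fit_threshold(train):
    """Toy 'model': a cut-off halfway between the two classes seen in
    training. Assumes class 1 has larger values than class 0."""
    max_neg = max(x for x, label in train if label == 0)
    min_pos = min(x for x, label in train if label == 1)
    return (max_neg + min_pos) / 2


def accuracy(threshold, rows):
    """Fraction of rows where (x > threshold) matches the known label."""
    correct = sum((x > threshold) == bool(label) for x, label in rows)
    return correct / len(rows)


# Hypothetical labeled data: values 1-10 are class 0, values 20-29 are class 1.
data = [(float(x), 0) for x in range(1, 11)] + [(float(x), 1) for x in range(20, 30)]
train, test = train_test_split(data)
t = fit_threshold(train)
test_acc = accuracy(t, test)
print(len(train), len(test), t, test_acc)
```

The test rows play no part in fitting `t`, so `test_acc` is an honest preview of performance on new data; with real, messier data it will usually land below the training score.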

So what?

A few things to note: good, clean data & a good business case are much more boring than getting to the sexy algorithms part, but they are key to making data science worth it. Once those are in place, choosing and training a model gets you to your goal much faster.

Industry everywhere is just starting to apply this, and as both the algorithms & the data get more effective, the bigger changes are still ahead.

Wisely apply this and you can reap the rewards of being at the edge of tech.